Interactive LLM chat

As part of building out yerr, I have been thinking a lot about the UX of LLMs. In yerr, you chat with an AI agent about your preferences, and it captures every detail that matters when finding an apartment. That includes major ones like budget and move-in date, and subtler ones like lighting, distance from certain locations, and so on. It should feel like talking to a real person. To set the scene, think back to a time you worked with a broker, real estate agent, or assistant.

Originally, I started with a plain chat interface, the iconic interface we have become so accustomed to over the past couple of years. For all its simplicity, it works really well and does a lot. I could have called it done right there, but I wanted to see if I could make some small enhancements, even marginal ones.

The competing paradigm has been voice, but for the most part it still sucks. I force myself to use OpenAI's voice app, but I never end up forming a habit around it, whereas I find myself using the chat interface more and more. Chat is elegant, sticky, and habit-forming; voice is clunky and underwhelming.

Given my general dislike of voice, I decided to see if I could add some kind of interactivity to chat instead. The friction I hit when building the agent was that the back-and-forth sometimes took too long. And some questions, like location and budget, felt better suited to a structured, questionnaire-like format with validation. But I didn't want to split the experience into two separate components and make it feel disjointed.

So I had an idea: what if I casually sprinkled structured questions into the AI agent conversation so it naturally flowed and felt seamless? The web era has created so many great interactive components—dropdowns, radio buttons, sliders, date ranges, and so on. What if I could just inject those into the conversation?

Chat is pretty much made from two components:

- Rich text for LLM output
- Text field for human input

Most language models generate plain text, but they have also been fine-tuned to produce markdown for readability. How much markdown has improved the experience is perhaps underrated. If you have ever read raw LLM output, especially code, you know how jarring it can feel.

So markdown was the obvious place to investigate. I looked at how popular markdown renderers like ReactMarkdown (the react-markdown package) handle code. The LLM wraps code in triple backticks and puts the programming language right after the opening fence. For example, to generate Python it outputs:

```python
def foo():
    return 1 + 2
```

ReactMarkdown handles the usual markdown, and when it hits a fenced code block it hands it to a code renderer, which is where extras like syntax highlighting plug in. The great thing is that this is super customizable: you can intercept the code block and render it however you want. That got me thinking: what if I created a "question" language and intercepted that block?
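To make the mechanism concrete: the word after the opening backticks is the fence's info string, and react-markdown exposes it to the code renderer as a "language-..." class name. The sketch below pulls the same information out of raw markdown with a regular expression. The extractFencedBlocks helper is hypothetical and not part of react-markdown, and it only handles simple, unnested triple-backtick fences; a real markdown parser covers far more edge cases.

```javascript
// Hypothetical helper: find fenced code blocks and their language tags in raw markdown.
// Only simple, unnested fences are supported (opening and closing fence at line start).
function extractFencedBlocks(markdown) {
  const blocks = [];
  // Opening fence + optional language, lazily captured body, then a closing fence line.
  const fence = /^`{3}([\w-]*)[^\n]*\n([\s\S]*?)^`{3}[ \t]*$/gm;
  let match;
  while ((match = fence.exec(markdown)) !== null) {
    // An empty info string means "no language", reported here as null.
    blocks.push({ lang: match[1] || null, body: match[2] });
  }
  return blocks;
}
```

Run on a reply that contains a "question" fence, it returns that block with lang set to "question" and the raw JSON text as its body, which is exactly the hook the renderer intercepts.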

The first step was instructing the AI to use the following format whenever it wanted to ask a question:

```question
{
  "id": "move_timeline",
  "type": "date",
  "label": "When do you need to move in?",
  "description": "Even a rough timeframe helps"
}
```

Basically, it's just a JSON object. The type sets the kind of interactive component we render; in this example it's a date, so we show a date picker. The id links the question to its answer. The remaining fields are descriptive and help flesh out the UI. On the frontend, we do the following:

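```jsx
import ReactMarkdown from "react-markdown";

// MyQuestion is your own component that renders the picker for def.type.
function MyRenderer({ markdown, onAnswer }) {
  return (
    <ReactMarkdown
      components={{
        code({ node, className, children, ...props }) {
          // Fenced blocks carry their language as a "language-xxx" class; inline code
          // has no class, so this only matches fenced blocks. (Recent react-markdown
          // versions no longer pass an `inline` prop, so we rely on the class alone.)
          const lang = (className || "").replace("language-", "");
          if (lang === "question") {
            try {
              const def = JSON.parse(String(children));
              return <MyQuestion def={def} onAnswer={onAnswer} />;
            } catch {
              // While the reply is still streaming, the JSON is usually incomplete.
              // Render nothing until the block parses.
              return null;
            }
          }
          return (
            <code className={className} {...props}>
              {children}
            </code>
          );
        },
      }}
    >
      {markdown}
    </ReactMarkdown>
  );
}
```

That's pretty much it! We catch the question block, parse the JSON, and pass it down to a question component. The onAnswer callback handles whatever side effect you want; in this instance, it takes the response and feeds it back to the LLM.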
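As a rough illustration of that feedback loop, here is one way onAnswer could turn an answer into a message for the model. Everything in it is an assumption: the (def, value) callback signature, the hypothetical answerToMessage and makeOnAnswer helpers, and the { role, content } message shape, which mirrors common chat-completion APIs. Echoing the question's id is what lets the model connect the answer back to the question it asked.

```javascript
// Hypothetical: convert a structured answer into a plain chat message for the LLM.
function answerToMessage(def, value) {
  let text;
  if (Array.isArray(value)) {
    // Multi-select answers: join the chosen options into one readable list.
    text = value.join(", ");
  } else if (value instanceof Date) {
    // Date pickers: keep only the calendar date (YYYY-MM-DD, in UTC).
    text = value.toISOString().slice(0, 10);
  } else {
    text = String(value);
  }
  return {
    role: "user",
    // Include the question id and label so the model can match answer to question.
    content: `[answer:${def.id}] ${def.label}\n${text}`,
  };
}

// Hypothetical onAnswer factory: append the answer to the history and re-prompt the model.
// sendToModel stands in for whatever client call you use to continue the conversation.
function makeOnAnswer(history, sendToModel) {
  return (def, value) => {
    history.push(answerToMessage(def, value));
    return sendToModel(history);
  };
}
```

Sending the answer as an ordinary user message keeps the transcript easy for the model to follow; a more structured channel, such as a tool-call result, would be an alternative design.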

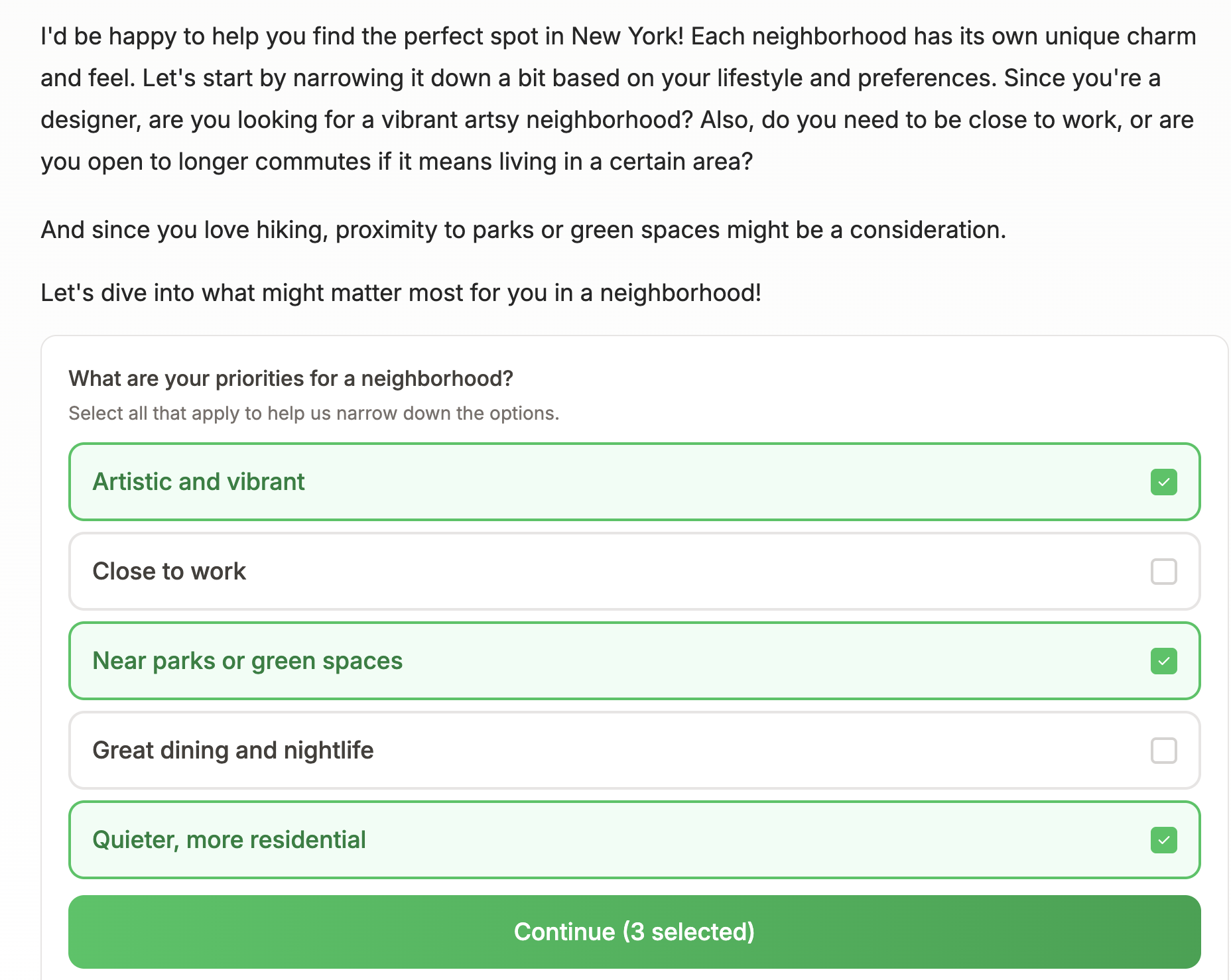

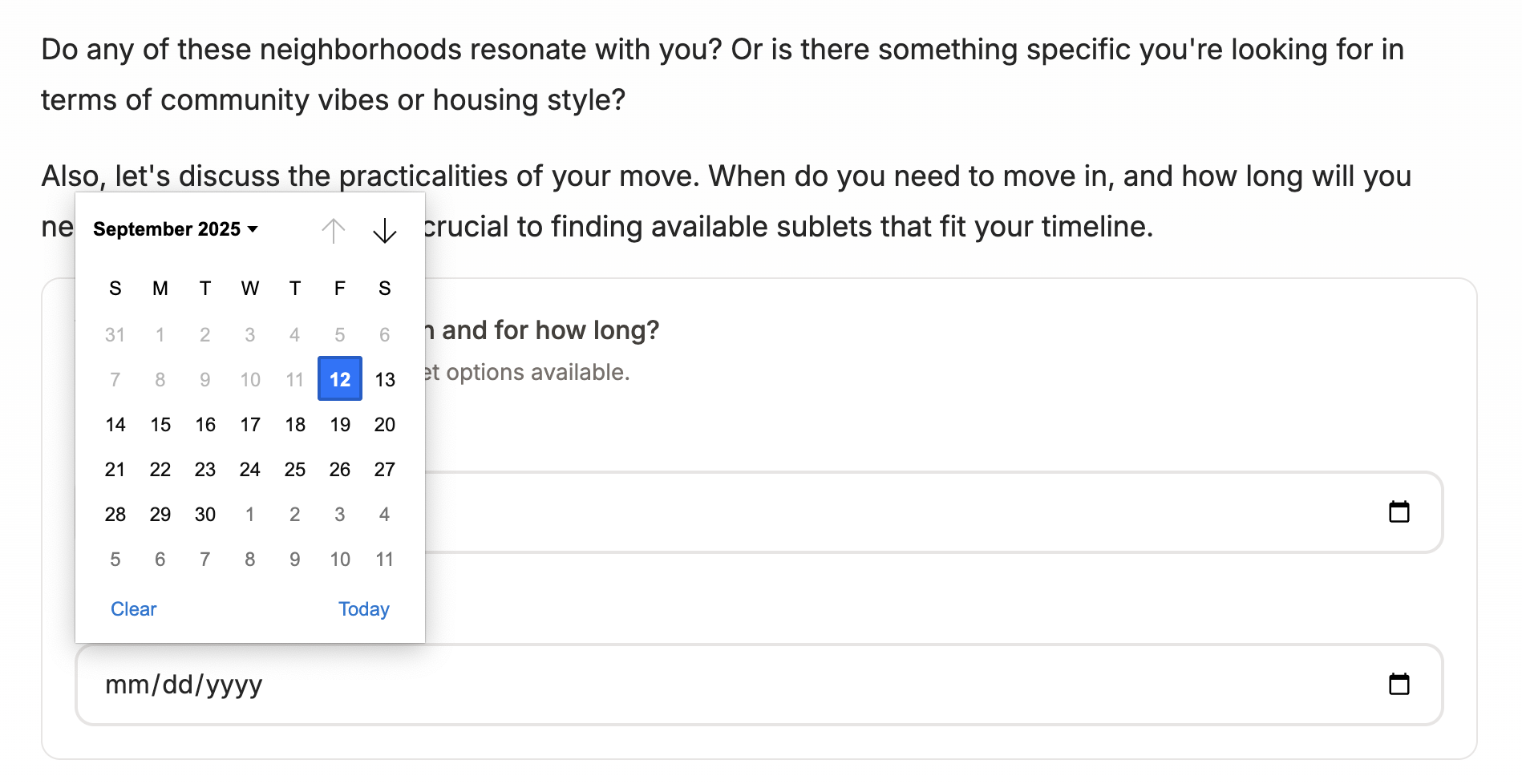

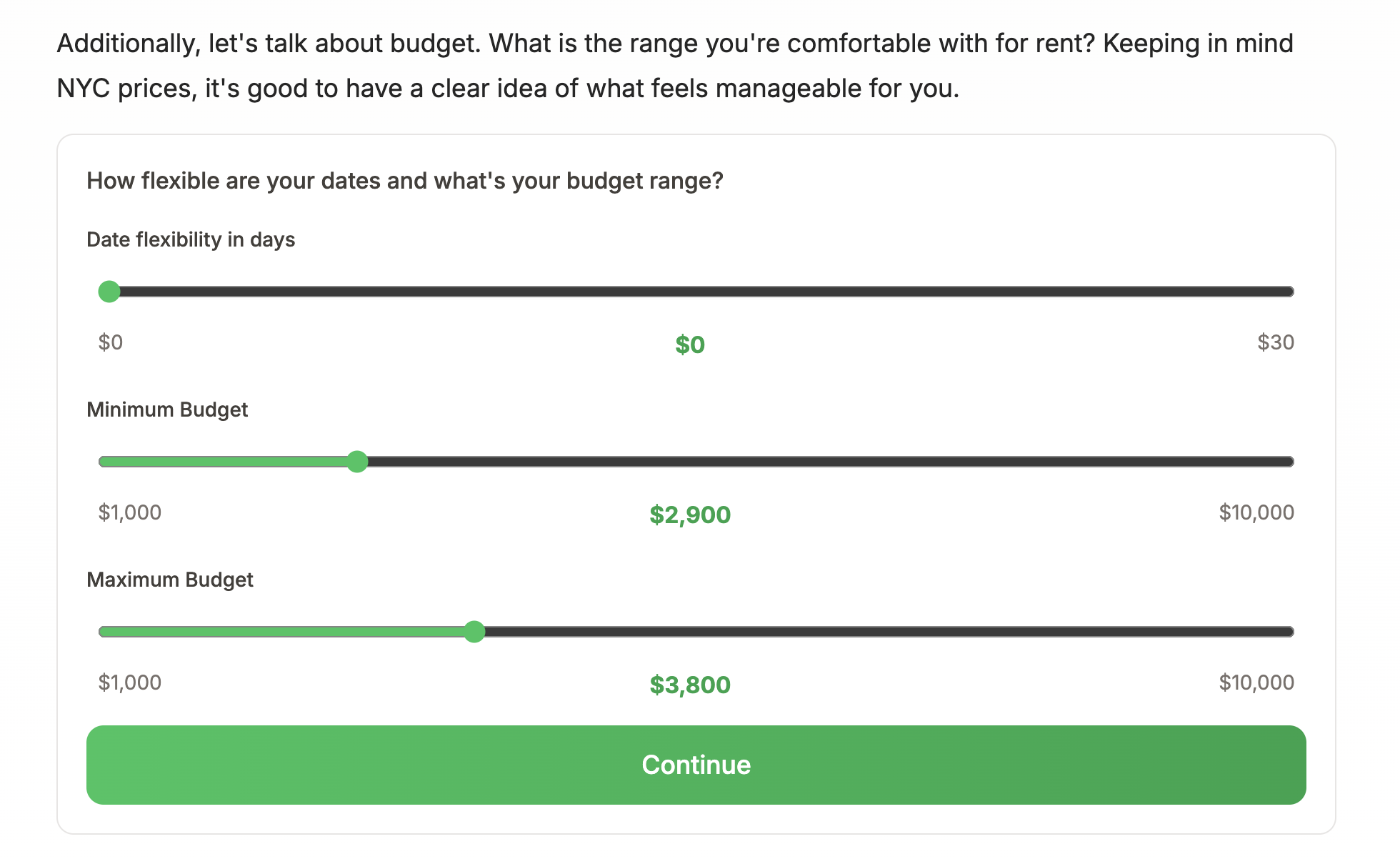

Here are a couple of examples of what it looks like in practice:

MCQ with multi-select

Date time picker

Sliders

There are so many other components you could add here. A few that come to mind: address autocomplete for questions like "Where do you work?" or "What neighborhood do you live in?", availability calendars, image grids for choosing apartment styles, and so on.

I'll admit that having an AI agent generate the structured questions, instead of hardcoding them, feels like the wrong software design pattern: it trades strong consistency for a more human-like interaction. Still, I've been seeing good results so far, and nothing has really broken. Validation is in place to catch any major correctness issues, although how much can you really mess up asking an MCQ?
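A minimal sketch of what that validation could look like, run on the parsed JSON before rendering. The validateQuestion helper, the set of supported types, and the mcq and slider fields (options, min, max) are assumptions for illustration, not the actual schema behind yerr.

```javascript
// Hypothetical set of question types the renderer knows how to display.
const SUPPORTED_TYPES = new Set(["date", "mcq", "slider", "text"]);

// Returns a list of problems with a model-generated question; an empty list means valid.
function validateQuestion(def) {
  if (typeof def !== "object" || def === null || Array.isArray(def)) {
    return ["question must be a JSON object"];
  }
  const errors = [];
  if (typeof def.id !== "string" || def.id.trim() === "") {
    errors.push("id must be a non-empty string");
  }
  if (!SUPPORTED_TYPES.has(def.type)) {
    errors.push(`unsupported type: ${def.type}`);
  }
  if (typeof def.label !== "string" || def.label.trim() === "") {
    errors.push("label must be a non-empty string");
  }
  if (def.type === "mcq") {
    // A multiple-choice question needs at least two distinct options to make sense.
    const opts = def.options;
    if (!Array.isArray(opts) || opts.length < 2) {
      errors.push("mcq needs at least two options");
    } else if (new Set(opts).size !== opts.length) {
      errors.push("mcq options must be unique");
    }
  }
  if (def.type === "slider") {
    // A slider needs a numeric range with min strictly below max.
    if (typeof def.min !== "number" || typeof def.max !== "number" || def.min >= def.max) {
      errors.push("slider needs numeric min < max");
    }
  }
  return errors;
}
```

An empty array means the block is safe to render. For a non-empty one, you could fall back to showing the question as plain text, or send the errors back to the model and ask it to try again.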

The whole thing is a bit of a hack, but it works. Chat interfaces don't have to be just text fields and markdown output. By sneaking interactive components into the conversation flow, you can capture structured data without breaking the illusion of talking to someone who actually gets what you need. It's not revolutionary, but it makes the experience feel less like filling out forms and more like having a conversation. Sometimes, that's enough.